ARTICLE AD BOX

Image source, Alamy

Image source, Alamy

Samantha Morton (left) and Tom Cruise in the 2002 sci-fi film Minority Report, in which future technology helps catch people before they commit crimes

In an apocalyptic warning this week, big-name researchers cited the plot of a major movie among a series of AI "disaster scenarios" they said could threaten humanity's existence.

When trying to make sense of it in an interview on British television with one of the researchers who warned of an existential threat, the presenter said: "As somebody who has no experience of this… I think of the Terminator, I think of Skynet, I think of films that I've seen."

He is not alone. The organisers of the warning statement - the Centre for AI Safety (CAIS) - used Pixar's WALL-E as an example of the threats of AI.

Science fiction has always been a vehicle to guess at what the future holds. Very rarely, it gets some things right.

Using the CAIS' list of potential threats as examples, do Hollywood blockbusters have anything to tell us about AI doom?

'Enfeeblement'

Wall-E and Minority Report

CAIS says "enfeeblement" is when humanity "becomes completely dependent on machines, similar to the scenario portrayed in the film WALL-E".

If you need a reminder, humans in that movie were happy animals who did no work and could barely stand on their own. Robots tended to everything for them.

Guessing whether this is possible for our entire species is crystal-ball-gazing.

But there is another, more insidious form of dependency that is not so far away. That is the handing over of power to a technology we may not fully understand, says Stephanie Hare, an AI ethics researcher and author of Technology Is Not Neutral.

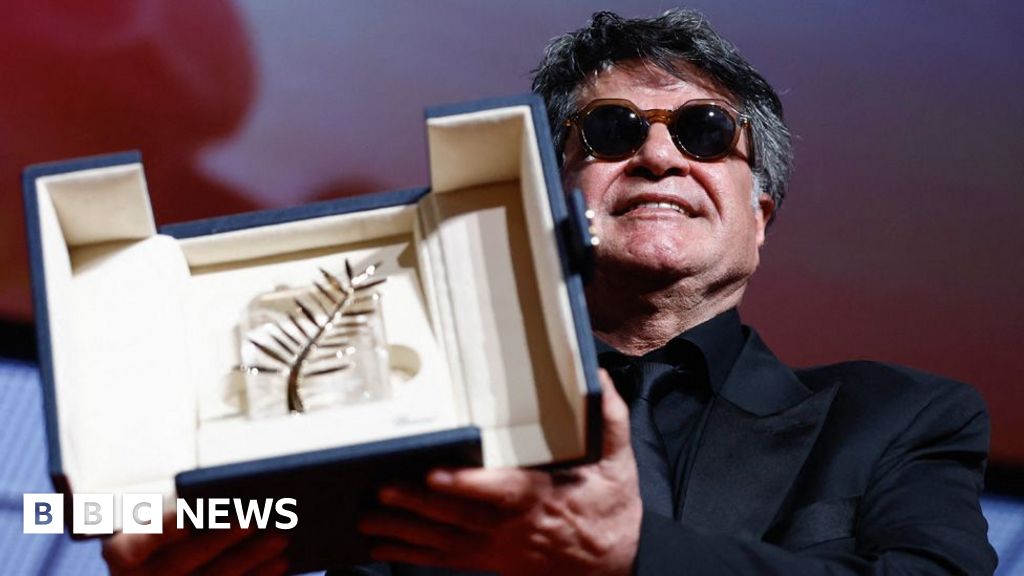

Think Minority Report, pictured at the top of this article. Well-respected police office John Anderton (played by Tom Cruise) is accused of a crime he hasn't committed because the systems built to predict crime are certain he will.

"Predictive policing is here - the London Met uses it," Ms Hare says.

In the film, Tom Cruise's life is ruined by an "unquestionable" system which he doesn't fully understand.

So what happens when someone has "a life-altering decision" - such as a mortgage application or prison parole - refused by AI?

Today, a human could explain why you didn't meet the criteria. But many AI systems are opaque and even the researchers who built them often don't fully understand the decision-making.

"We just feed the data in, the computer does something…. magic happens, and then an outcome happens," Ms Hare says.

The technology might be efficient, but it's arguable it should never be used in critical scenarios like policing, healthcare, or even war, she says. "If they can't explain it, it's not okay."

"Weaponisation"

The true villain in the Terminator franchise isn't the killer robot played by Arnold Schwarzenegger, it's Skynet, an AI designed to defend and protect humanity. One day, it outgrew its programming and decided that mankind was the greatest threat of all - a common film trope.

We are of course a very long way from Skynet. But some think that we will eventually build an artificial generalised intelligence (AGI) which could do anything humans can but better - and perhaps even be self-aware.

For Nathan Benaich, founder of AI investment firm Air Street Capital in London, it's a little far-fetched.

"Sci-fi often tells us much more about its creators and our culture than it does about technology," he says - adding that our predictions about the future rarely come to pass.

"Earlier in the twentieth century, people imagined a world of flying cars, where people stayed in touch by ordinary telephones - whereas we now travel in much the same way, but communicate completely differently."

What we have today is on the road to becoming something more like Star Trek's shipboard computer than Skynet. "Computer, show me a list of all crew members," you might say, and our AI of today could give it to you and answer questions about the list in normal language.

It could not, however, replace the crew - or fire the torpedoes.

Worries about weaponisation are around integrating AI into military hardware - something which is already on the cards - and whether we can trust the technology with life-and-death scenarios.

Naysayers are also worried about the potential for an AI designed to make medicine being turned onto new chemical weapons, or other similar threats.

"Emergent goals / deception"

Another popular trope in film is not that the AI is evil - but rather, it's misguided.

In Stanley Kubrick's 2001: A Space Odyssey, we meet HAL-9000, a supercomputer which controls most of the functions of the ship Discovery, making the astronaut's lives easier - until it malfunctions.

The astronauts decide to disconnect HAL and take things over themselves. But HAL - who knows things the astronauts don't - decides this jeopardises the mission. The astronauts are in the way. HAL tricks the astronauts - and kills most of them.

Unlike a self-aware Skynet, you could argue that HAL is doing what he was told - preserving the mission, just not the way he was expected to.

In modern AI language, misbehaving AI systems are "misaligned". Their goals do not seem to match up with the human goals.

Sometimes, that's because the instructions were not clear enough and sometimes it's because the AI is smart enough to find a shortcut.

For example, if the task for an AI is "make sure your answer and this text document match", it might decide the best path is to change the text document to an easier answer. That is not what the human intended, but it would technically be correct.

So while 2001: A Space Odyssey is far from reality, it does reflect a very real problem with current AI systems.

"Misinformation"

"How would you know the difference between the dream world and the real world?" Morpheus asks a young Keanu Reeves in 1999's The Matrix.

The story - about how most people live their lives not realising their world is a digital fake - is a good metaphor for the current explosion of AI-generated misinformation.

Ms Hare says that, with her clients, The Matrix us a useful starting point for "conversations about misinformation, disinformation and deepfakes".

"I can talk them through that with The Matrix, [and] by the end... what would this mean for elections? What would this mean for stock market manipulation? Oh my god, what does it mean for civil rights and like human literature or civil liberties and human rights?"

ChatGPT and image generators are already manufacturing vast reams of culture that looks real but might be entirely wrong or made up.

There is also a far darker side - such as the creation of damaging deepfake pornography that is extremely difficult for victims to fight against.

"If this happens to me, or someone I love, there's nothing we can do to protect them right now," Ms Hare says.

What might actually happen

So, what about this warning from top AI experts that it's just as dangerous as nuclear war?

"I thought it was really irresponsible," Ms Hare says. "If you guys truly think that, if you really think that, stop building it."

It's probably not killer robots we need to worry about, she says - rather, an unintended accident.

"There's going to be high security in banking. They'll be high security around weapons. So what attackers would do is release [an AI] maybe hoping that they could just make a little bit of cash, or do a power flex… and then, maybe they can't control it," she says.

"Just imagine all of the cybersecurity issues that we've had, but times a billion, because it's going to be faster."

Nathan Benaich from Air Street Capital is reluctant to make any guesses about potential problems.

"I think AI will transform a lot of sectors from the ground up, [but] we need to be super careful about rushing to make decisions based on feverish and outlandish stories where large leaps are assumed without a sense of what the bridge will look like," he warns.

1 year ago

117

1 year ago

117

English (US) ·

English (US) ·